The affordability of DeepSeek is a myth: The revolutionary AI actually cost $1.6 billion to develop

DeepSeek's new chatbot introduces itself with a bold promise: "Hi, I was created so you can ask anything and get an answer that might even surprise you." Built by the Chinese startup DeepSeek, the AI quickly became a major player and even contributed to a significant drop in Nvidia's stock price. Its success stems from a distinctive architecture and training methodology built on several key technologies:

Multi-token Prediction (MTP): Traditional models are trained to predict only the next token. MTP instead trains the model to predict several upcoming tokens at each position, which gives it a denser training signal and improves both accuracy and efficiency.
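To make the idea concrete, here is a minimal sketch of how multi-token training targets and a combined loss could be built. It is an illustration under stated assumptions, not DeepSeek's implementation: the function names, the toy probability dictionaries, and the weight `lam` are all hypothetical, and a real model would produce the probabilities from extra prediction modules rather than hand-written dictionaries.

```python
import math

def mtp_targets(tokens, depth):
    """Pair each position with the next `depth` tokens it should predict.

    Positions too close to the end to have `depth` future tokens are skipped.
    """
    return [(tokens[i], tokens[i + 1 : i + 1 + depth])
            for i in range(len(tokens) - depth)]

def mtp_loss(predictions, targets, lam=0.3):
    """Next-token loss plus a weighted average loss over the extra depths.

    predictions[i][k] maps candidate tokens to the probability the model assigns
    to that token appearing k + 1 steps after position i.
    lam is a hypothetical weight for the extra-depth terms.
    """
    depth = len(targets[0][1])
    main = extra = 0.0
    for preds, (_, future) in zip(predictions, targets):
        main += -math.log(preds[0][future[0]])        # ordinary next-token term
        for k in range(1, depth):
            extra += -math.log(preds[k][future[k]])   # tokens further ahead
    n = len(targets)
    extra_avg = extra / (n * (depth - 1)) if depth > 1 else 0.0
    return main / n + lam * extra_avg

# Usage: with depth=2, every position must predict the next two tokens.
tokens = ["the", "cat", "sat", "on", "the", "mat"]
for current, future in mtp_targets(tokens, depth=2):
    print(current, "->", future)
```

With `depth=1` this reduces to ordinary next-token training; each extra depth adds one more prediction target per position, which is where the denser training signal comes from.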

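Mixture of Experts (MoE): This architecture splits the model into many specialized sub-networks ("experts") and routes each token to only a few of them, so only a fraction of the model's parameters is used for any given token. This cuts the computation per token, which speeds up training while maintaining strong performance. DeepSeek V3 uses 256 experts per MoE layer and activates eight of them for each token.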
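The routing step can be sketched in a few lines: score every expert, keep the top eight, and mix only their outputs. This is a toy illustration, not DeepSeek's router. The router scores here are random numbers and the "experts" are hypothetical functions that scale their input; in a real model the scores come from the token's hidden state and each expert is a feed-forward network. The sketch uses a softmax over the selected scores, a common gating choice that may differ from DeepSeek's exact formulation.

```python
import math
import random

NUM_EXPERTS = 256  # experts per MoE layer, as described above
TOP_K = 8          # experts activated for each token

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route(router_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, router_logits, experts, k=TOP_K):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(x)
    for idx, weight in route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out

# Usage with hypothetical toy experts: expert i simply scales its input by i.
experts = [lambda x, s=i: [s * v for v in x] for i in range(NUM_EXPERTS)]
rng = random.Random(0)
router_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
print(route(router_logits))  # 8 (expert, weight) pairs whose weights sum to 1
print(moe_forward([1.0, 2.0], router_logits, experts))
```

Only 8 of the 256 expert functions are ever called per token, which is the source of the compute savings: the model can hold many parameters while spending only a small slice of them on each token.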

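Multi-head Latent Attention (MLA): This attention mechanism compresses the keys and values the model must remember for each token into a compact latent vector. This shrinks the memory needed during inference while retaining the key details from the text, so little information is lost and subtle nuances are still captured.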
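DeepSeek's published descriptions of MLA center on caching a small latent vector per token instead of full keys and values, then expanding that latent back into keys and values when attention needs them. The toy cache below sketches that idea with plain Python lists. The class, the random projection matrices, and all dimensions are hypothetical, and the sketch omits details of the real design such as positional-encoding handling and optimizations that avoid rebuilding keys explicitly.

```python
import random

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

class LatentKVCache:
    """Toy MLA-style cache: keep one small latent per token, rebuild K and V on demand."""

    def __init__(self, d_model, d_latent, d_kv, seed=0):
        rng = random.Random(seed)
        self.w_down = rand_matrix(d_latent, d_model, rng)  # hidden state -> latent
        self.w_up_k = rand_matrix(d_kv, d_latent, rng)     # latent -> keys
        self.w_up_v = rand_matrix(d_kv, d_latent, rng)     # latent -> values
        self.latents = []                                   # the only thing cached

    def append(self, hidden):
        self.latents.append(matvec(self.w_down, hidden))

    def keys_values(self):
        keys = [matvec(self.w_up_k, c) for c in self.latents]
        values = [matvec(self.w_up_v, c) for c in self.latents]
        return keys, values

def mha_floats_per_token(n_heads, d_head):
    """Standard attention caches a full key and value vector for every head."""
    return 2 * n_heads * d_head

# Toy usage: 8 cached floats per token are expanded into 64-wide keys and values.
cache = LatentKVCache(d_model=64, d_latent=8, d_kv=64)
for t in range(4):
    cache.append([float(t)] * 64)
keys, values = cache.keys_values()
print(len(cache.latents[0]), len(keys[0]))

# Hypothetical sizes: 32 heads of width 128 versus a 512-wide latent.
print(mha_floats_per_token(32, 128) / 512)  # per-token, per-layer reduction factor
```

The trade-off is a little extra computation (the up-projections) in exchange for a much smaller cache, which matters most when serving long conversations.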

DeepSeek initially claimed to have trained its powerful DeepSeek V3 neural network for a mere $6 million using 2,048 GPUs. However, the research firm SemiAnalysis estimated a far more extensive infrastructure: approximately 50,000 Nvidia Hopper GPUs, including 10,000 H800s, 10,000 H100s, and additional H20s, spread across multiple data centers. By its estimate, this represents a total server investment of roughly $1.6 billion, with operational expenses of about $944 million.
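A few lines of arithmetic put the reported figures side by side. The sketch uses only the numbers given in the paragraph above. The H20 count is not stated in the text; it is derived here only under the assumption that the approximate 50,000 total is exact.

```python
# Figures as given in the text; the SemiAnalysis numbers are estimates.
claimed_cost_usd = 6_000_000      # DeepSeek's pre-training claim
claimed_gpus = 2_048
total_gpus = 50_000               # "approximately"
h800_gpus = 10_000
h100_gpus = 10_000
server_capex_usd = 1_600_000_000  # "roughly"

# Assumption: treating the ~50,000 total as exact, the remainder would be H20s.
implied_h20_gpus = total_gpus - h800_gpus - h100_gpus
capex_multiple = server_capex_usd / claimed_cost_usd
fleet_share = claimed_gpus / total_gpus

print(f"Implied H20s: ~{implied_h20_gpus:,}")
print(f"Server spend vs. claimed cost: ~{capex_multiple:.0f}x")
print(f"Claimed GPUs as share of fleet: {fleet_share:.1%}")
```

On these numbers, the GPUs in DeepSeek's claim amount to roughly 4% of the estimated fleet, and the estimated server spend is a few hundred times the headline training figure.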

DeepSeek, a subsidiary of the High-Flyer hedge fund, owns its data centers, unlike many startups that rely on cloud services. This gives it greater control over optimization and lets it put new ideas into practice faster. Being self-funded also makes the company more flexible and speeds up decision-making. In addition, DeepSeek attracts top talent, recruited primarily from Chinese universities, with some researchers earning over $1.3 million a year.

The initial $6 million training cost claim is unrealistic as a measure of total cost: it refers only to the GPU time used for pre-training and excludes research, refinement, data processing, and infrastructure. In total, DeepSeek has invested over $500 million in AI development. Its lean structure lets it implement innovations more efficiently than larger, more bureaucratic companies.

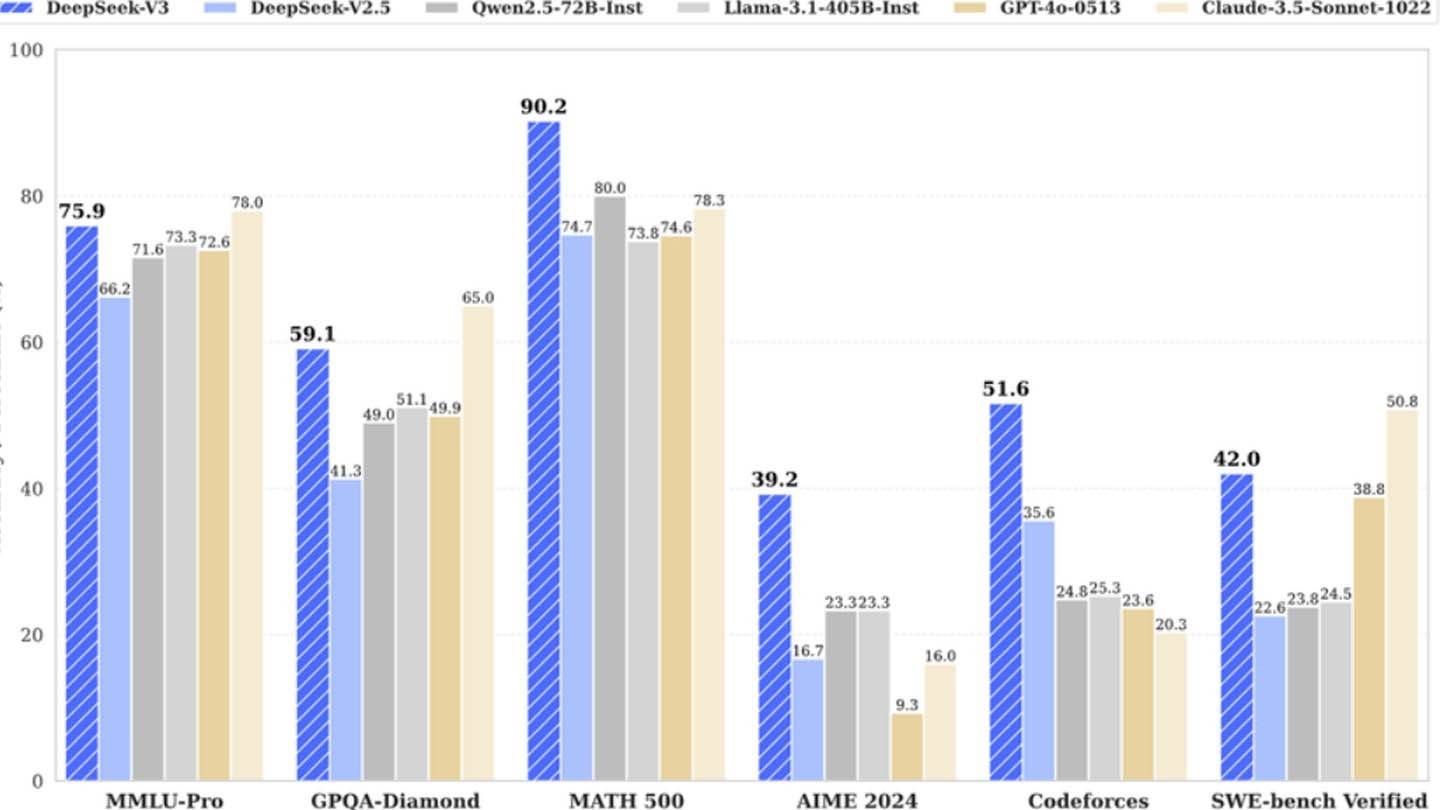

DeepSeek's success highlights the competitive potential of well-funded, independent AI companies. However, its achievements are rooted in substantial investment, technological breakthroughs, and a strong team, which makes the "revolutionary budget" claim an oversimplification. Even so, DeepSeek's costs remain significantly lower than its competitors': for example, its R1 model cost $5 million to train, compared with $100 million for GPT-4.
